Understanding the inner workings of the Java Virtual Machine (JVM) is essential for grasping why Java remains a cornerstone of modern programming. From enabling platform independence to driving innovation, the JVM is pivotal in ensuring Java's adaptability and reliability across diverse applications.

This article examines how the JVM has shaped modern programming. Dive in to explore what lies behind Java's versatility and lasting success! Let's start with a clear understanding of what the JVM is and why it matters.

Definition of Java Virtual Machine: Core Concepts

JVM stands for Java Virtual Machine, the runtime component that manages application memory and provides a portable execution environment for Java-based applications. This gives developers consistent runtime behavior across platforms, along with strong performance and stability.

At its core, the JVM executes programs with simplicity and efficiency that have redefined the programming landscape. Its innovative architecture continues to drive advancements in modern software development, making it a critical tool for developers seeking stability, scalability, and cutting-edge performance.

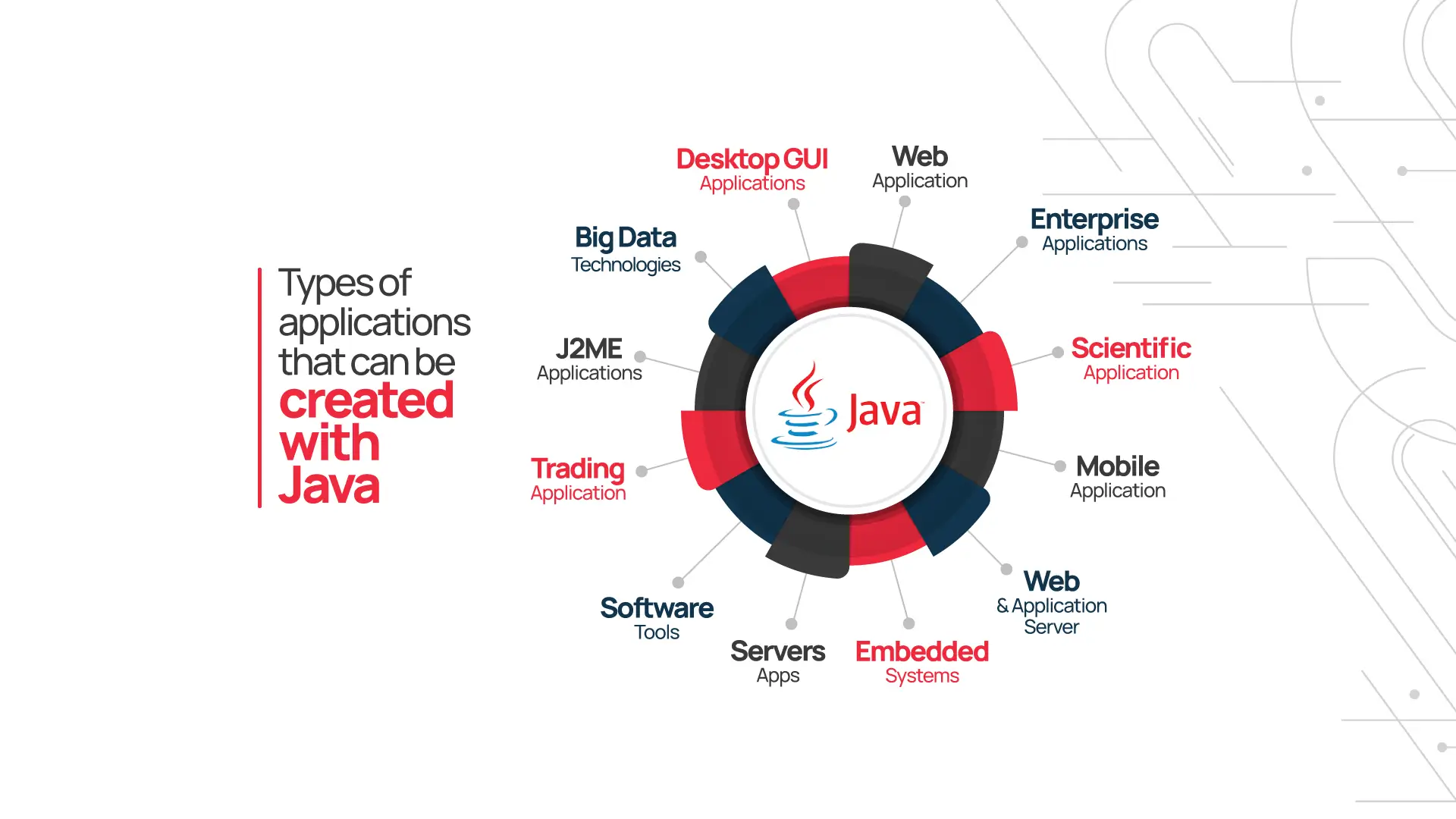

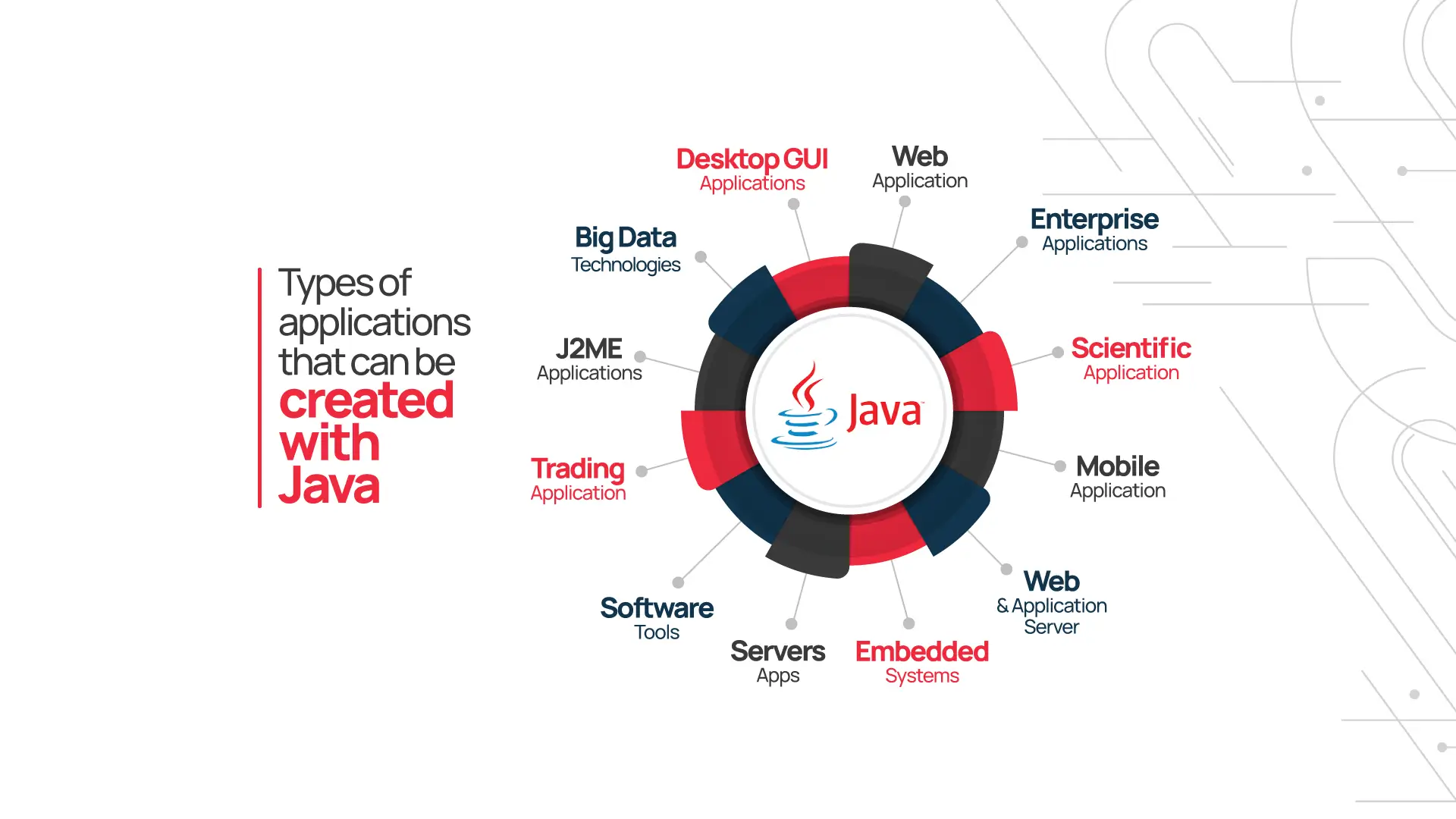

At the same time, it powers applications for some of the most successful companies that use Java, including tech giants and enterprises in finance, e-commerce, fintech, and healthcare.

What is JVM and its Purpose?

To understand the Java Virtual Machine, we should first address what the JVM is and its purpose. The Java Virtual Machine (JVM) is a key component of the Java Runtime Environment (JRE), and it is responsible for executing Java programs.

It acts as an abstraction layer between the compiled Java code and the underlying hardware, enabling platform independence. Its primary purpose is to provide a runtime environment for Java applications, ensuring portability, security, and performance.

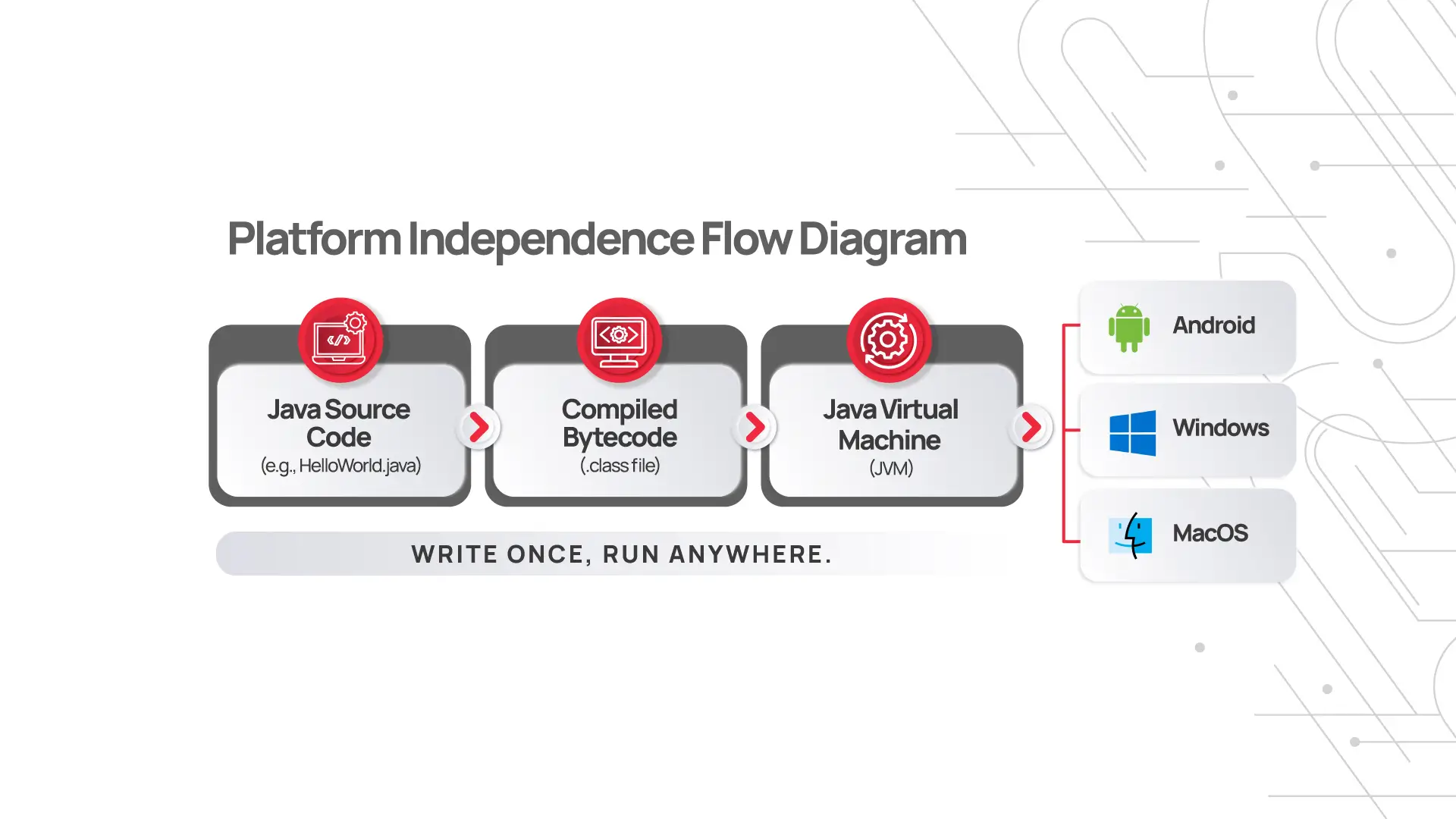

The Java Virtual Machine (JVM) is a cornerstone of the Java ecosystem, serving as the execution engine that powers Java's defining feature: "write once, run anywhere."

It ensures a consistent runtime environment for executing Java bytecode, the output of the Java compiler. It abstracts hardware and operating system specifics, allowing Java applications to run seamlessly on any compatible platform, fostering cross-platform compatibility and reducing development complexity.

The Role of JVM in the Java Ecosystem

Beyond execution, the JVM is pivotal in resource management and application performance within the Java ecosystem. It handles memory allocation, garbage collection, and multithreading, ensuring efficient use of system resources.

The JVM also incorporates a Just-In-Time (JIT) compiler to optimize performance by translating frequently used bytecode into native machine code at runtime. Moreover, it provides robust security mechanisms, such as bytecode verification and sandboxing, safeguarding applications against malicious code.

The JVM's versatility is further highlighted by its ability to host other JVM-compatible languages like Kotlin, Scala, and Groovy, making it a foundational pillar of the broader Java ecosystem.

Platform Independence: The Essence of JVM

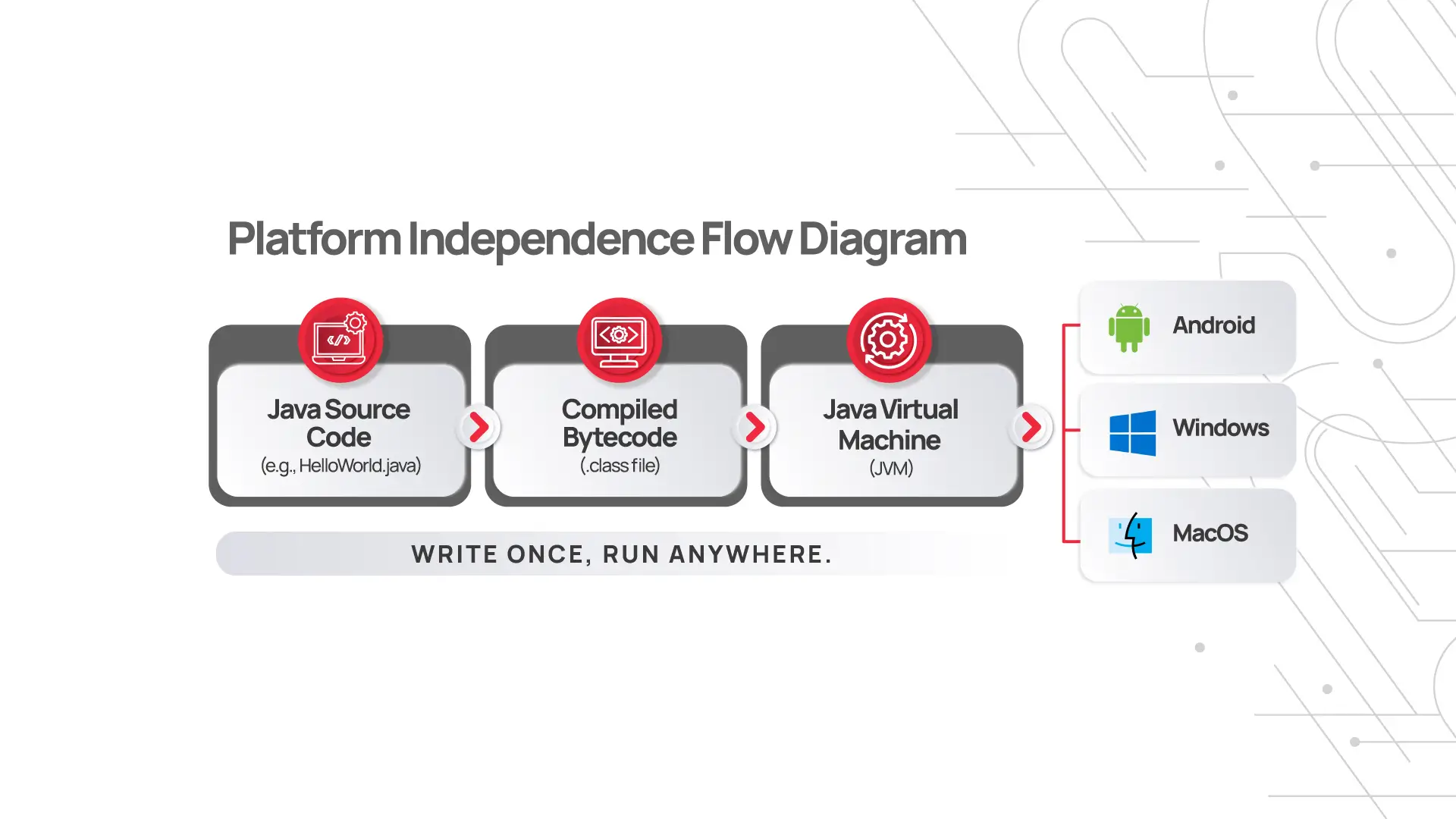

At its core, the JVM embodies platform independence, which is the foundation of Java's "write once, run anywhere" philosophy. The JVM achieves this by serving as an intermediary between Java bytecode and the underlying hardware or operating system.

But how does this work? When Java code is compiled, it is transformed into bytecode, a universal, platform-agnostic format. The operating system does not execute this bytecode directly; instead, the JVM implementation built for the host platform interprets it or compiles it into native machine code.

This design ensures that the same Java program can run seamlessly on any device or system equipped with a compatible JVM, regardless of differences in hardware architecture or operating systems.

The essence of platform independence in the JVM extends beyond mere portability. It standardizes application behavior across diverse environments, simplifying development and testing while reducing compatibility issues. Developers can focus on writing high-quality code without worrying about system-specific details.

This independence also enables Java to thrive in a wide range of domains, from desktop applications and web servers to mobile devices and embedded systems.

Applying JVM Insights to Your Java Project

If you’re planning or currently developing a Java project, a solid understanding of how the JVM manages memory, security, and multithreading is crucial. By leveraging the JVM's platform independence and Just-In-Time compilation, your team can optimize your application’s performance and scalability across operating systems.

Whether you’re building enterprise services, mobile applications, or innovative IoT solutions, the JVM’s features help your Java project meet modern demands for security, reliability, and efficiency. For organizations that lack sufficient in-house expertise, a practical approach is to outsource Java development to a specialized partner.

An outsourcing strategy can help you quickly assemble a team of qualified, expert Java engineers, supporting on-time delivery and reducing overhead. Whichever path you choose, focusing on these key areas will help your Java applications reach their full potential.

Architecture of the JVM: Breaking Down Components

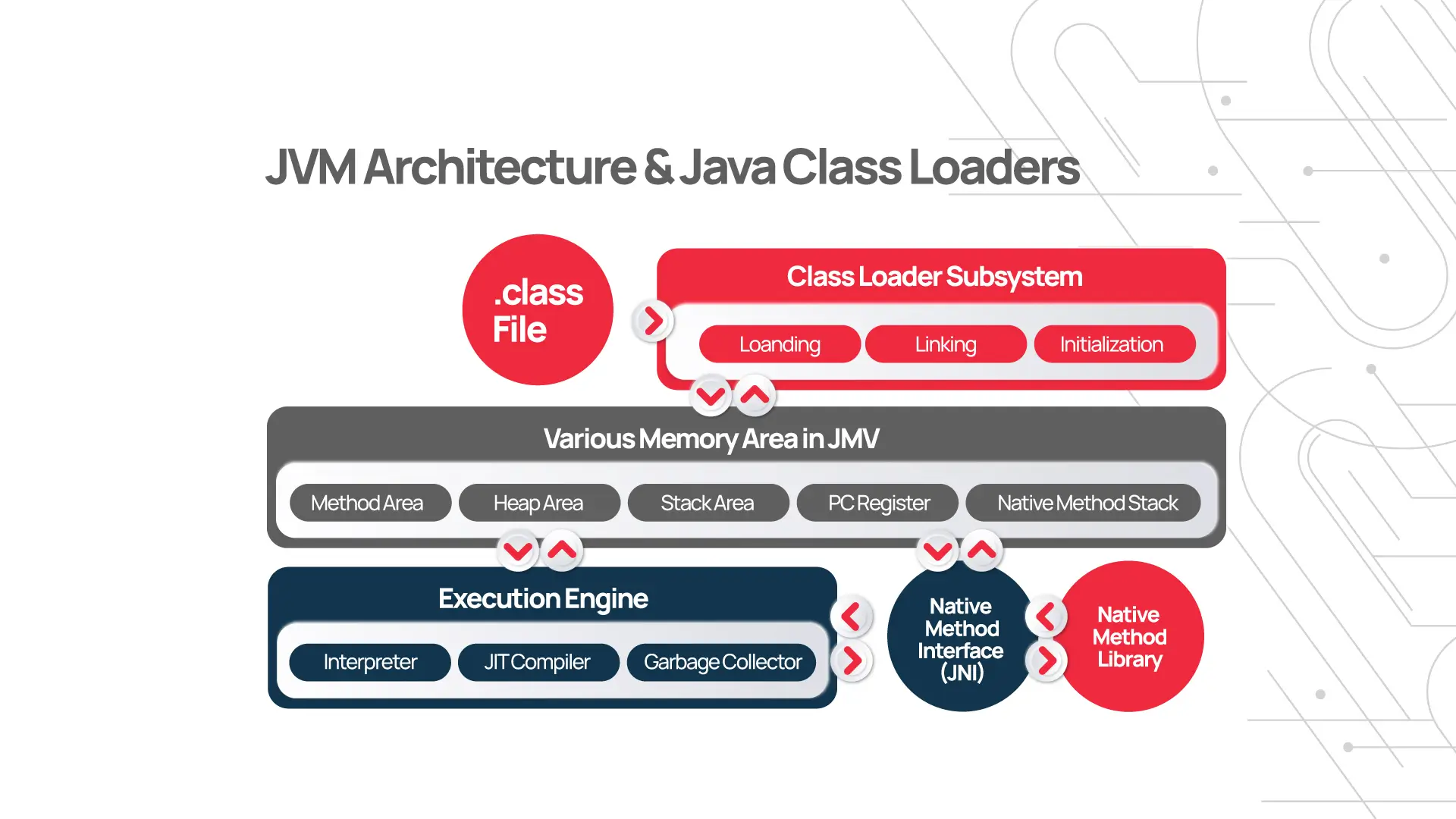

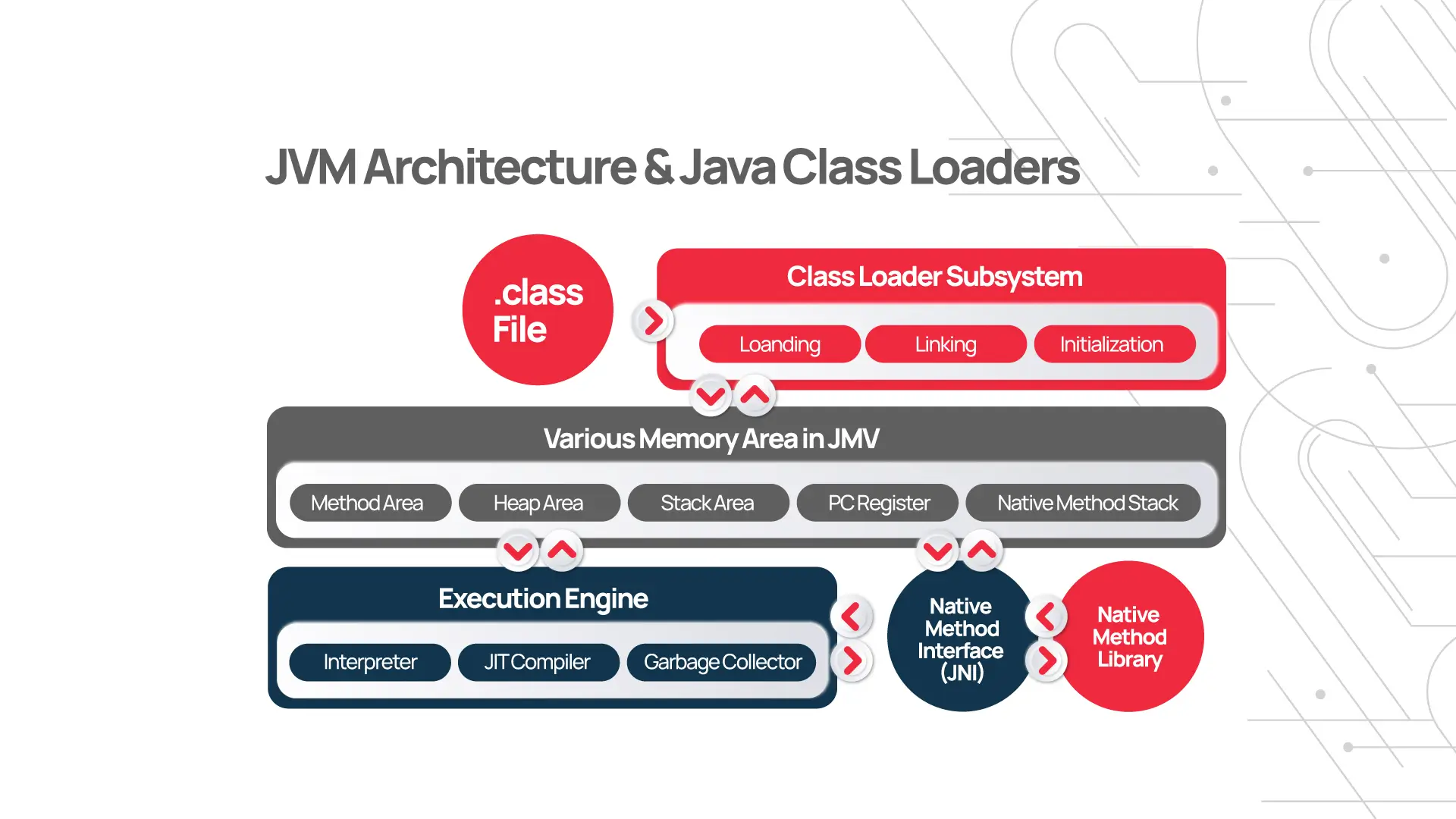

The architecture of the JVM consists of several components. We will explore its key aspects, starting with the class loader subsystem and its functionality. Ready?

The Class Loader Subsystem and Its Functionality

The Class Loader Subsystem is responsible for dynamically loading, linking, and initializing classes during runtime. It eliminates the need for manual inclusion of external libraries or components, providing flexibility and modularity in Java applications. When a Java program references a class, the Class Loader Subsystem locates the corresponding bytecode, loads it into the JVM's memory, and prepares it for execution.
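To make the loading step more concrete, here is a minimal sketch that asks which class loader defined a class and checks whether a class can be loaded by name at runtime. The class name `ClassLoaderDemo` and its helper methods are illustrative assumptions, not part of any JVM API; only `Class.forName` and `Class.getClassLoader` are standard.

```java
// Sketch: inspecting class loaders and loading a class by name at runtime.
class ClassLoaderDemo {

    // Returns a readable name for the loader that defined the given class.
    // Core classes are defined by the bootstrap loader, which the API
    // represents as null.
    static String loaderNameOf(Class<?> type) {
        ClassLoader loader = type.getClassLoader();
        return loader == null ? "bootstrap" : loader.getClass().getName();
    }

    // Asks the JVM to locate, load, link, and initialize a class by its
    // fully qualified name. Returns false if no such class can be found.
    static boolean isLoadable(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException notFound) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("String loaded by: " + loaderNameOf(String.class));
        System.out.println("Demo loaded by:   " + loaderNameOf(ClassLoaderDemo.class));
        System.out.println("ArrayList loadable: " + isLoadable("java.util.ArrayList"));
        System.out.println("Made-up class loadable: " + isLoadable("com.example.DoesNotExist"));
    }
}
```

The name-based lookup is what lets frameworks discover plugins or drivers that were not known when the application itself was compiled.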

This dynamic behavior supports Java's extensibility and makes it possible to add or update application components without recompiling the rest of the program. Next comes JVM memory management, a sophisticated system designed to optimize resource utilization and ensure smooth execution of Java applications.

JVM Memory Management: Areas and Allocation

The JVM organizes memory into distinct areas, each serving a specific purpose: Heap, Stack, Method Area, Program Counter Register, and Native Method Stack. These areas work together to allocate, manage, and reclaim memory efficiently during runtime:

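The Heap is typically the largest memory area and stores objects and arrays, including their instance fields. It is shared by all threads and managed by the garbage collector, which reclaims memory from objects that are no longer reachable.

The Stack is dedicated to storing method-specific data, such as local variables, partial results, and references, in frames that are pushed and popped in Last-In-First-Out (LIFO) order as methods are called and return. Each thread has its own stack, so local variables are never shared between threads; the heap objects those variables refer to can still be shared.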

The Method Area stores class metadata, including method code, static variables, and runtime constant pool, while the Program Counter Register tracks the address of the current instruction being executed by each thread.

The Native Method Stack supports native method execution, allowing Java to interface with non-Java code.

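This structured approach lets the JVM allocate and reclaim memory automatically, which removes whole classes of manual memory-management bugs. It does not eliminate every problem: objects that stay reachable unintentionally still leak memory, and runaway recursion still exhausts a thread's stack and raises a StackOverflowError.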
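The difference between the heap and a thread's stack can be observed from inside a program. The sketch below is an illustration under assumptions (the class and method names are made up for this example): it reads the heap limit through the standard `Runtime` API and counts how many frames fit on the current thread's stack before the JVM raises `StackOverflowError`. The exact numbers depend on the JVM, its flags, and the platform.

```java
// Sketch: observing the heap limit and the finite size of a thread's stack.
class MemoryAreasDemo {

    // Recurses until the current thread's stack is exhausted and reports
    // how many calls were made. Each call pushes one frame onto the stack.
    static int measureStackDepth() {
        int[] depth = {0};
        try {
            recurse(depth);
        } catch (StackOverflowError expected) {
            // The stack is finite; the JVM signals exhaustion with this error.
        }
        return depth[0];
    }

    private static void recurse(int[] depth) {
        depth[0]++;
        recurse(depth);
    }

    // Maximum heap the JVM will attempt to use, in bytes (influenced by -Xmx).
    static long maxHeapBytes() {
        return Runtime.getRuntime().maxMemory();
    }

    public static void main(String[] args) {
        // The array object lives on the heap; the reference 'data' is a
        // local variable stored in main's stack frame.
        int[] data = new int[1_000];
        System.out.println("Array length: " + data.length);
        System.out.println("Max heap (MiB): " + maxHeapBytes() / (1024 * 1024));
        System.out.println("Calls before StackOverflowError: " + measureStackDepth());
    }
}
```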

Execution Engine: How the JVM Executes Code

The Execution Engine is another vital component of the JVM, serving as the core responsible for running Java bytecode. It bridges the gap between the platform-independent bytecode and the underlying hardware, ensuring the application runs efficiently on any device with a compatible JVM.

The Execution Engine utilizes interpretation, Just-In-Time (JIT) compilation, and native code execution to convert bytecode into machine code executable by the host system.

Initially, the Execution Engine interprets bytecode, executing instructions one at a time. To optimize performance, the JIT compiler compiles frequently executed bytecode into native machine code and caches the result for future use.

This hybrid approach balances flexibility and performance, as interpretation is lightweight and fast to start, while JIT compilation enhances execution speed for long-running applications.

Additionally, the Execution Engine interacts with native libraries through the Native Interface, enabling Java to leverage platform-specific features when necessary.

Interaction with Native Code: Java Native Interface (JNI)

In this context, it is important to highlight the Java Native Interface (JNI), a framework that allows Java applications to integrate with native code written in languages such as C or C++.

This interaction is crucial for integrating platform-specific features, accessing low-level hardware capabilities, or leveraging existing native libraries. JNI acts as a bridge, allowing bidirectional communication between the Java Virtual Machine (JVM) and native applications or libraries.

Through JNI, Java applications can call native functions and pass data between the managed Java environment and the unmanaged native code. The JVM provides a set of APIs and conventions for loading native libraries, mapping Java methods to native functions, and converting data types between Java and native languages. Native methods are declared in Java with the native keyword and implemented in the corresponding native language.
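As a rough illustration of the Java side of JNI, the sketch below declares a native method and attempts to load a library. The library name `demo_native_hypothetical` and the class `NativeBridgeDemo` are assumptions invented for this example; no such library ships with the JDK, so the load is expected to fail and the code falls back to plain Java.

```java
// Sketch: declaring a native method and loading a (hypothetical) library.
class NativeBridgeDemo {

    // Declared in Java, implemented in native code (for example C or C++).
    // There is no Java body; the JVM binds the method to a function in a
    // loaded native library.
    static native int addNative(int a, int b);

    // Tries to load a native library by name. Returns false when the
    // library cannot be found on java.library.path.
    static boolean tryLoad(String libraryName) {
        try {
            System.loadLibrary(libraryName);
            return true;
        } catch (UnsatisfiedLinkError missing) {
            return false;
        }
    }

    // Uses the native implementation when available, otherwise plain Java.
    static int add(int a, int b, boolean nativeAvailable) {
        return nativeAvailable ? addNative(a, b) : a + b;
    }

    public static void main(String[] args) {
        // Hypothetical library name; it is not expected to exist.
        boolean loaded = tryLoad("demo_native_hypothetical");
        System.out.println("Native library loaded: " + loaded);
        System.out.println("2 + 3 = " + add(2, 3, loaded));
    }
}
```

In a real project the native side would be a compiled shared library exporting a function whose name follows the JNI naming convention for `addNative`.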

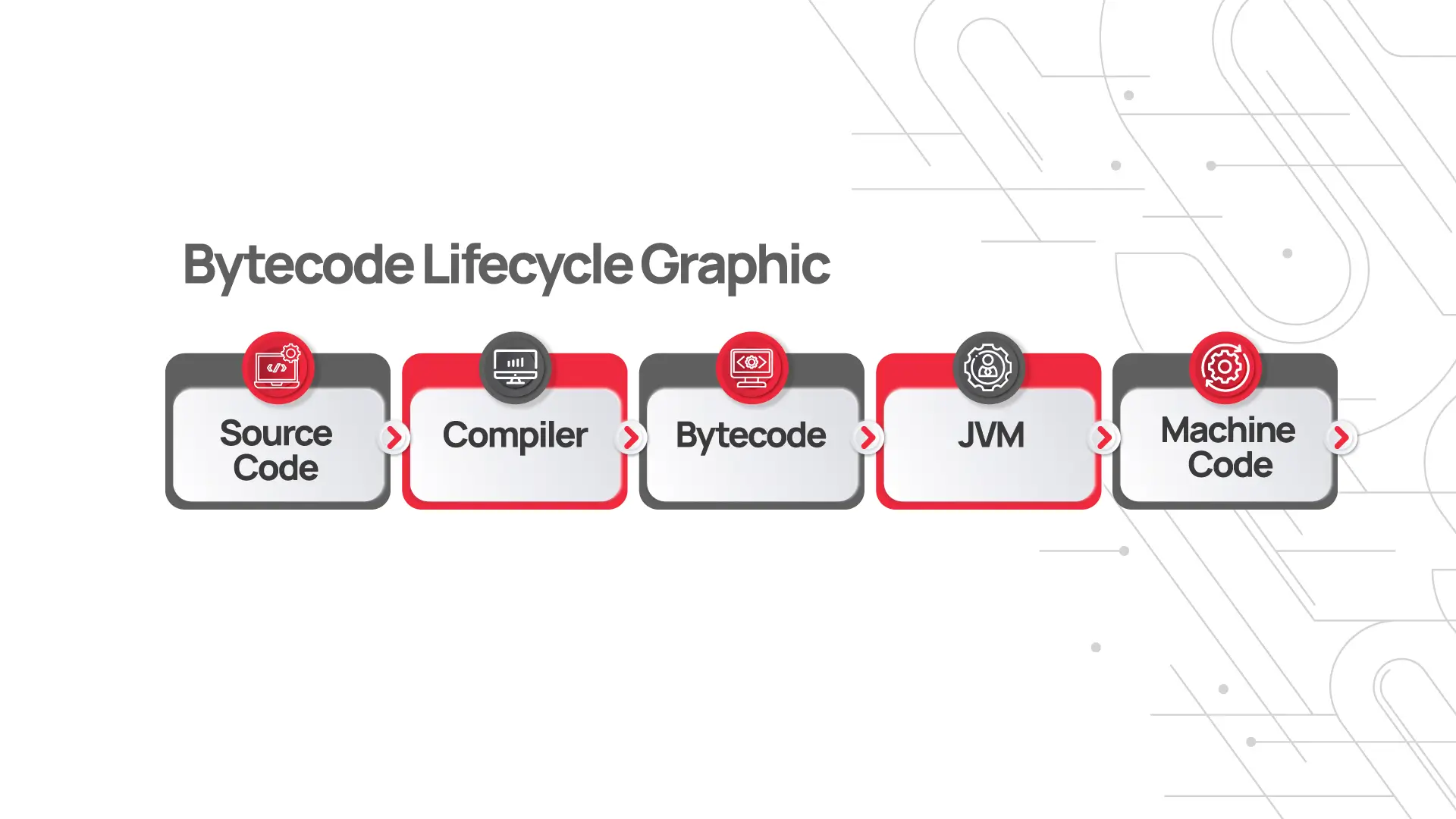

Bytecode and Compilation: Understanding the Process

What is Bytecode and its Significance?

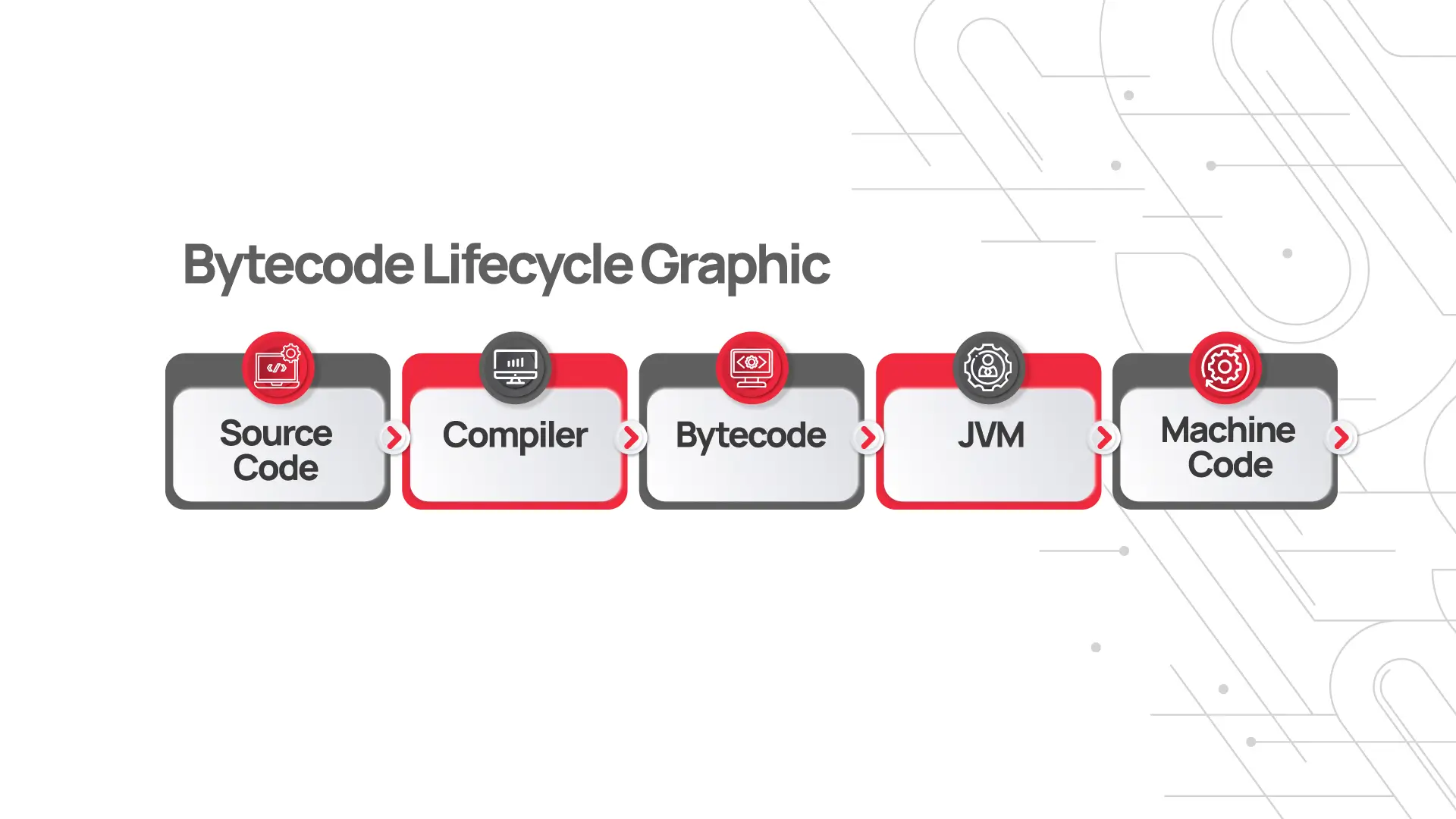

We have referenced Java bytecode, but what exactly is it, and why is it particularly significant? Bytecode is an intermediate, platform-independent representation of a Java program generated after the source code is compiled by the Java Compiler (javac).

It serves as the executable format for the Java Virtual Machine (JVM), bridging the gap between high-level Java code and low-level machine code. Bytecode is stored in .class files and consists of compact, standardized instructions that the JVM interprets or compiles into native machine code during runtime.

We have already mentioned Java's "write once, run anywhere" principle. The bytecode is a cornerstone in this aspect as it abstracts the hardware and operating system-specific details. This way, bytecode allows Java applications to run seamlessly on any platform with a compatible JVM.

This makes Java highly portable and versatile for cross-platform development. Additionally, bytecode is optimized for performance and security, as the JVM verifies its correctness and prevents malicious behavior before execution.
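One way to see that .class files follow a standardized format is to read the first few bytes of one. Every class file begins with the magic number 0xCAFEBABE, followed by the minor and major versions of the class file format. The sketch below reads that header for `java.lang.Object`; the class name `ClassFileHeaderDemo` and its helper are illustrative assumptions, and the version numbers printed depend on the JDK in use.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;

// Sketch: reading the header of a compiled class file.
class ClassFileHeaderDemo {

    // Reads the first 8 bytes of a class file: a 4-byte magic number,
    // then 2-byte minor and major format versions.
    // Returns {magic, minor, major}.
    static int[] readHeader(Class<?> type) {
        String resource = type.getSimpleName() + ".class";
        try (InputStream raw = type.getResourceAsStream(resource)) {
            if (raw == null) {
                throw new IllegalStateException("Class file not found: " + resource);
            }
            DataInputStream in = new DataInputStream(raw);
            int magic = in.readInt();
            int minor = in.readUnsignedShort();
            int major = in.readUnsignedShort();
            return new int[] {magic, minor, major};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] header = readHeader(Object.class);
        System.out.printf("magic=0x%08X minor=%d major=%d%n",
                header[0], header[1], header[2]);
    }
}
```

Because the header and instruction set are the same on every platform, any compliant JVM can check the file and run it, whatever machine it was compiled on.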

How the JVM Interprets Bytecode

The JVM interprets bytecode through its Execution Engine, which translates the intermediate bytecode into machine-specific instructions that the host system can execute. When a Java program is compiled, the source code is converted into bytecode.

The JVM reads this bytecode during runtime, interpreting it one instruction at a time. This interpretation process involves the JVM's Interpreter, which reads the bytecode, decodes each instruction, and then executes it on the underlying machine.

The Just-in-Time (JIT) Compiler: Enhancing Performance

While interpretation allows for platform independence and flexibility, it is generally slower compared to directly executing native machine code. To optimize performance, the JVM incorporates Just-In-Time (JIT) Compilation. The JIT compiler identifies frequently executed sections of bytecode and compiles them into native code, allowing those parts of the program to run faster in subsequent executions.

These frequently executed parts of the code are called hot spots. Compilation happens dynamically while the program is running, allowing the JVM to optimize based on actual usage patterns rather than theoretical scenarios.

The primary benefit of the JIT compiler is its ability to improve execution speed over time. This hybrid approach—initially interpreting bytecode and later using compiled machine code—strikes a balance between fast startup and long-term performance optimization, ensuring efficient execution of Java programs across diverse platforms.
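The warm-up effect can be observed informally by timing the same workload several times in one run. The sketch below is only a rough illustration with made-up names (`JitWarmupDemo`, `timeBatch`); timings vary widely between machines and JVMs, and it is not a substitute for a proper benchmarking harness. On HotSpot, running with the `-XX:+PrintCompilation` flag shows when methods get compiled.

```java
// Sketch: timing repeated batches of a small, hot method.
class JitWarmupDemo {

    // A small, frequently called method: a typical JIT compilation candidate.
    static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 1; i <= n; i++) {
            total += (long) i * i;
        }
        return total;
    }

    // Runs the workload repeatedly and returns the elapsed nanoseconds.
    static long timeBatch(int calls, int n) {
        long start = System.nanoTime();
        long sink = 0;
        for (int c = 0; c < calls; c++) {
            sink += sumOfSquares(n);
        }
        long elapsed = System.nanoTime() - start;
        // Use the result so the work cannot be discarded as dead code.
        if (sink == 42) {
            System.out.println("unexpected");
        }
        return elapsed;
    }

    public static void main(String[] args) {
        // Later batches often run faster than the first one once the hot
        // method has been compiled, but this is not guaranteed.
        for (int batch = 1; batch <= 5; batch++) {
            long micros = timeBatch(2_000, 1_000) / 1_000;
            System.out.printf("batch %d: %d us%n", batch, micros);
        }
    }
}
```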

Bytecode Verifier: Ensuring Safety and Security

One key aspect we haven’t addressed yet is safety and security. The Bytecode Verifier is an essential security feature of the Java Virtual Machine (JVM) that checks the integrity and safety of bytecode before it is executed.

When a class is loaded, its bytecode is verified as part of linking, before any of its code runs, to ensure that it adheres to the JVM's strict rules and does not contain any unsafe operations.

This process helps prevent malicious or corrupted code from harming the system or causing unintended behavior, such as accessing unauthorized memory areas or violating Java’s access control restrictions.

Garbage Collection in JVM: Memory Management Techniques

In the Java Virtual Machine (JVM), Garbage Collection (GC) is a crucial memory management technique designed to automatically reclaim memory used by objects that are no longer needed, ensuring efficient resource utilization and preventing memory leaks.

Overview of Garbage Collection Algorithms

The JVM employs various garbage collection algorithms, each optimized for different scenarios.

Modern JVMs typically use Generational Garbage Collection, which divides the heap into generations: the Young Generation (for newly created objects) and the Old Generation (for long-lived objects). Older HotSpot versions also had a Permanent Generation for class metadata; since Java 8 it has been replaced by Metaspace, which lives in native memory outside the heap.

This strategy optimizes garbage collection by frequently collecting the Young Generation, where most objects are short-lived, while collecting the Old Generation less frequently.
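The generations and collectors a particular JVM is using can be listed through the standard `java.lang.management` API. The sketch below (the class name `GcIntrospectionDemo` is an assumption for illustration) prints the active collectors and memory pools; the names it reports depend on the JVM vendor, version, and the selected collector.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.util.ArrayList;
import java.util.List;

// Sketch: listing the garbage collectors and memory pools of the running JVM.
class GcIntrospectionDemo {

    // Names of the collectors currently managing the heap.
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    // Memory pools with their type (heap or non-heap) and name.
    static List<String> memoryPools() {
        List<String> pools = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            pools.add(pool.getType() + ": " + pool.getName());
        }
        return pools;
    }

    public static void main(String[] args) {
        System.out.println("Collectors: " + collectorNames());
        for (String pool : memoryPools()) {
            System.out.println(pool);
        }
    }
}
```

On a typical HotSpot JVM the pool list includes young and old generation pools on the heap side and Metaspace on the non-heap side.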

How Garbage Collection Impacts Performance

Garbage Collection (GC) plays a vital role in managing memory in the Java Virtual Machine (JVM), but its execution can significantly impact the performance of Java applications.

The primary effect of GC on performance is the pause time or stop-the-world events, during which the application’s execution is paused while the garbage collector reclaims memory. These pauses can range from a few milliseconds to several seconds, depending on the size of the heap, the GC algorithm used, and the number of live objects in memory. If the garbage collection is too frequent or takes too long, it can introduce noticeable latency, affecting application responsiveness and throughput.

The type of garbage collection algorithm used also influences performance. For instance, the Generational Garbage Collection improves performance by collecting short-lived objects more frequently in the Young Generation, but this can still cause delays when transitioning objects to the Old Generation or during full heap collections.

Parallel Garbage Collection can alleviate some of the performance hits by utilizing multiple threads to perform garbage collection tasks, which can speed up the process, especially in multi-core systems.

However, these benefits come at the cost of increased CPU usage. On the other hand, Incremental Garbage Collection aims to reduce pause times by breaking the GC process into smaller steps, though it may require more CPU resources over a longer period.

Customizing Garbage Collection in JVM

Garbage Collection (GC) is customizable in the Java Virtual Machine (JVM). This allows developers to optimize memory management and fine-tune application performance based on specific needs.

The JVM provides several garbage collection algorithms, each suited for different use cases.

Developers can customize other JVM parameters to further optimize garbage collection. These include adjusting heap size (-Xms for initial and -Xmx for maximum heap size) to control memory allocation, setting the number of GC threads (-XX:ParallelGCThreads or -XX:ConcGCThreads) to take advantage of multi-core processors, and enabling GC logging (-Xlog:gc*, available in JDK 9 and later) for detailed performance analysis.

This way, developers can reduce GC pauses, improve throughput, and balance memory usage according to the specific requirements of their applications, though careful testing and monitoring are essential to ensure optimal performance.

Multithreading and Synchronization in JVM

Thread management in the Java Virtual Machine (JVM) is crucial for enabling concurrent execution of Java applications, making efficient use of multi-core processors, and improving application performance.

Each thread in the JVM is essentially an independent unit of execution, and the JVM is responsible for managing its lifecycle, including its creation, scheduling, and termination.
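The lifecycle described above can be observed through `Thread.getState()`. The following minimal sketch (class and method names are assumptions made for this example) creates a thread, starts it, waits for it to finish, and records its state before and after.

```java
// Sketch: observing a thread's lifecycle states.
class ThreadLifecycleDemo {

    // Returns the worker's state before start() and after join().
    static Thread.State[] observeLifecycle() {
        Thread worker = new Thread(() -> {
            // Trivial work; a real task would go here.
        }, "demo-worker");

        Thread.State beforeStart = worker.getState(); // created, not started
        worker.start();                               // the JVM schedules it
        try {
            worker.join();                            // wait for termination
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        Thread.State afterJoin = worker.getState();   // finished running
        return new Thread.State[] {beforeStart, afterJoin};
    }

    public static void main(String[] args) {
        Thread.State[] states = observeLifecycle();
        System.out.println("Before start: " + states[0]);
        System.out.println("After join:   " + states[1]);
    }
}
```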

Thread Management: Roles and Responsibilities

Thread scheduling is a key part of JVM thread management. Modern JVMs map regular (platform) Java threads to native operating system threads, so the operating system's scheduler allocates CPU time to each thread; Java thread priorities act only as hints to it.

Scheduling is therefore typically preemptive rather than cooperative: a running thread can be paused at any point so that another thread can run, which is why access to shared data must be coordinated.

The JVM also handles thread synchronization, which is essential for managing concurrent access to shared resources and preventing data corruption in multi-threaded environments.

Synchronization Mechanisms in JVM

Synchronization mechanisms, such as the synchronized keyword and explicit locks (ReentrantLock), allow threads to safely access shared data by ensuring that only one thread can access a resource at a time. This prevents race conditions, where multiple threads could modify the same data simultaneously, leading to unpredictable behavior.

Additionally, the JVM provides tools like thread-safe collections and atomic variables to simplify thread management and synchronization. Proper thread management and synchronization in the JVM ensure that applications are both efficient and reliable in a multi-threaded environment.
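The three approaches mentioned above can be compared side by side. The sketch below is an illustration with made-up names (`SafeCounters`, `runDemo`): several threads increment three counters, one guarded by `synchronized`, one by a `ReentrantLock`, and one implemented with `AtomicInteger`. With any of these, no increments are lost.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Sketch: three ways to make a shared counter safe for concurrent use.
class SafeCounters {
    private int syncCount;
    private int lockCount;
    private final ReentrantLock lock = new ReentrantLock();
    private final AtomicInteger atomicCount = new AtomicInteger();

    // Intrinsic lock on 'this': one thread at a time.
    synchronized void incrementSynchronized() {
        syncCount++;
    }

    // Explicit lock: always release in finally.
    void incrementWithLock() {
        lock.lock();
        try {
            lockCount++;
        } finally {
            lock.unlock();
        }
    }

    // Lock-free atomic update.
    void incrementAtomic() {
        atomicCount.incrementAndGet();
    }

    // Runs the given number of threads and returns the three final counts.
    static int[] runDemo(int threads, int incrementsPerThread) {
        SafeCounters counters = new SafeCounters();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    counters.incrementSynchronized();
                    counters.incrementWithLock();
                    counters.incrementAtomic();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join(); // join makes the workers' writes visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return new int[] {
            counters.syncCount, counters.lockCount, counters.atomicCount.get()
        };
    }

    public static void main(String[] args) {
        int[] totals = runDemo(4, 10_000);
        System.out.println("synchronized:  " + totals[0]);
        System.out.println("ReentrantLock: " + totals[1]);
        System.out.println("AtomicInteger: " + totals[2]);
    }
}
```

Removing the protection from any one counter (for example, dropping `synchronized`) would typically produce a total below the expected value, which is the race condition in action.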

Challenges of Concurrent Programming in JVM

One important issue to address is concurrent programming, as it presents several challenges that developers must navigate to ensure the safe and efficient execution of multi-threaded applications.

One of the primary challenges is dealing with race conditions, where multiple threads access and modify shared data simultaneously, leading to unpredictable behavior. Proper synchronization is required to prevent these issues, but careless locking, such as acquiring the same locks in different orders, can lead to deadlocks: a situation where two or more threads are blocked indefinitely, waiting for resources held by each other.

Deadlocks occur when threads hold locks and wait for others to release theirs, creating a cycle of dependencies that can freeze application progress.
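One common way to break such a cycle is to always acquire locks in a fixed global order. The sketch below is an illustration under assumptions: the `Account` type, its unique `id` field, and the method names are all made up for this example. Two threads transfer money in opposite directions, and because both lock the account with the smaller id first, neither can end up waiting on the other in a cycle.

```java
// Sketch: avoiding deadlock by acquiring locks in a consistent order.
class LockOrderingDemo {

    // Hypothetical account type; ids are assumed to be unique.
    static final class Account {
        final int id;
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    // Always locks the account with the smaller id first, regardless of
    // the transfer direction, so no cycle of waiting threads can form.
    static void transfer(Account from, Account to, long amount) {
        Account first = from.id < to.id ? from : to;
        Account second = first == from ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Runs transfers in both directions concurrently and returns the
    // combined balance, which should be unchanged.
    static long runOpposingTransfers(int rounds) {
        Account a = new Account(1, 1_000);
        Account b = new Account(2, 1_000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(a, b, 1);
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(b, a, 1);
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return a.balance + b.balance;
    }

    public static void main(String[] args) {
        System.out.println("Total after transfers: " + runOpposingTransfers(10_000));
    }
}
```

If each thread instead locked `from` first and `to` second, the two threads could each hold one lock while waiting for the other, which is exactly the cycle described above.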

Another challenge is thread contention, which happens when multiple threads compete for access to limited resources, such as CPU time, memory, or locks.

This can lead to performance bottlenecks, especially in highly concurrent applications. Developers need to carefully manage the number of threads and the resources they require to avoid excessive context switching, which occurs when the operating system has to repeatedly switch between threads, reducing overall efficiency.

Additionally, the JVM's garbage collection process can introduce unpredictable pause times, which may impact the performance of real-time or low-latency applications.

JVM Languages: Beyond Java

The Java Virtual Machine (JVM) is not limited to running just Java; it supports a variety of programming languages, allowing developers to take advantage of the JVM's features like memory management, garbage collection, and platform independence while writing code in different languages.

Two popular languages that run on the JVM are Kotlin and Scala, both of which provide modern, concise, and expressive alternatives to Java.

Languages that Run on JVM

Kotlin, developed by JetBrains, is a statically typed language designed to be fully interoperable with Java. It simplifies syntax, reduces boilerplate code, and introduces features like null safety, extension functions, and data classes, making it a popular choice for Android development.

Kotlin compiles directly to JVM bytecode, allowing developers to seamlessly use existing Java libraries and frameworks while benefiting from Kotlin's more modern features.

Scala, on the other hand, combines object-oriented and functional programming paradigms. It supports features like immutability, higher-order functions, and pattern matching, making it suitable for writing concise, expressive code.

Scala also runs on the JVM and is often used in areas like big data processing (e.g., with Apache Spark) due to its powerful concurrency and functional programming capabilities.

Advantages of Using JVM for Other Languages

Both Kotlin and Scala showcase the JVM's flexibility and ability to support a wide range of programming languages, each with its unique features and advantages, while leveraging the robust infrastructure of the JVM.

Using the Java Virtual Machine (JVM) for languages beyond Java offers several significant advantages due to the powerful features and infrastructure the JVM provides.

One of the key benefits is platform independence. Each language's compiler translates source code into bytecode, which can be executed on any system with a compatible JVM, allowing developers to write code once and run it anywhere.

Additionally, the JVM offers robust memory management and garbage collection systems that reduce the complexity of manual memory management in other languages. These built-in features ensure efficient resource utilization, automatic memory cleanup, and reduced likelihood of memory leaks.

The JVM also benefits from a rich ecosystem of libraries, frameworks, and tools built for Java, which can be easily accessed by any language running on the JVM. This ecosystem allows developers to tap into a vast array of existing solutions for various needs, from web development to data processing, thus accelerating development.

With JVM-based languages like Kotlin and Scala, developers can also seamlessly interoperate with Java code, combining the advantages of both worlds while maintaining a high level of efficiency and performance.

Security Features of the JVM: A Critical Overview

Regarding security, the JVM offers several robust security features to safeguard applications from potential threats and ensure system integrity.

One of the core security mechanisms is sandboxing, where the JVM runs code in a restricted environment, limiting access to sensitive system resources. This was especially important when executing untrusted or remote code, such as Java applets, a legacy technology that modern browsers no longer support. The sandboxing model prevents Java programs from performing harmful actions, like altering files or accessing the network, unless explicitly permitted by the system's security settings.

Additionally, as mentioned earlier, the JVM uses bytecode verification as a crucial security feature. Before bytecode is executed, the Bytecode Verifier checks it for illegal or malformed instructions, ensuring it adheres to the JVM specification’s rules and cannot violate memory safety or type safety.

The JVM has also implemented access control through security managers and security policies, which regulate what system resources a Java program can access. These policies can be customized to limit a program's actions based on its security context, providing fine-grained control over execution. Note that the Security Manager was deprecated for removal in Java 17, so newer applications typically rely on operating system or container-level isolation instead.

Together, these features help protect applications from malicious code while maintaining the flexibility and portability that the JVM offers.

How is the JVM Security Architecture Built?

The JVM security architecture is designed to protect applications and systems by providing several key components that ensure the safe execution of Java programs.

Historically, one of the most important elements has been the Security Manager, which acts as a gatekeeper between the running Java program and the operating system. The security manager controls access to system resources, such as files, network connections, and system properties, based on the security policies defined for the application. It prevents unauthorized actions by enforcing these policies, which can be fine-tuned to allow or deny specific operations.

The Bytecode Verifier ensures that bytecode is valid and adheres to the rules of the JVM specification. The Class Loader Subsystem is responsible for loading classes into the JVM, and it can be configured to restrict the loading of classes from untrusted sources, thus minimizing the risk of executing potentially harmful code.

Class File Verifier's Role in Security

When a class file is loaded, the Verifier checks the bytecode for consistency, type safety, and validity, ensuring that it does not violate any memory access rules or attempt to execute potentially dangerous operations.

This helps prevent common security vulnerabilities, such as buffer overflows, stack corruption, and invalid memory access, which could otherwise compromise the integrity of the system.

A common follow-up question is how to handle exceptions and security risks in the JVM, since improper exception handling can expose vulnerabilities and compromise application security.

Handling Exceptions and Security Risks in JVM

Exceptions are used to signal errors or unusual conditions during program execution, but poorly managed exceptions can lead to security risks like information leakage, denial of service, or improper application states.

The JVM provides a robust exception-handling mechanism through try-catch blocks, enabling developers to catch and handle errors gracefully, preventing abrupt terminations.

To mitigate the risks of poorly managed exceptions, catch them at well-defined boundaries, keep the details in internal logs, and return only generic messages to callers. Platform mechanisms such as security policies and code signing can additionally limit what code is allowed to do.
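As a small illustration of avoiding information leakage, the sketch below catches an I/O failure, records the details in a log, and returns a generic message that does not reveal the file path. The class name, the method `readConfig`, and the path used in `main` are hypothetical and exist only for this example.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.logging.Level;
import java.util.logging.Logger;

// Sketch: handling an exception without leaking internal details.
class SafeErrorHandlingDemo {
    private static final Logger LOG = Logger.getLogger("demo");

    // Returns the file content, or a generic error message on failure.
    static String readConfig(String path) {
        try {
            byte[] bytes = Files.readAllBytes(Paths.get(path));
            return new String(bytes, StandardCharsets.UTF_8);
        } catch (IOException e) {
            // Full details (path, stack trace) go to the internal log only.
            LOG.log(Level.WARNING, "Failed to read configuration", e);
            // The caller sees a message with no paths or stack traces.
            return "ERROR: configuration unavailable";
        }
    }

    public static void main(String[] args) {
        // Hypothetical path that is not expected to exist.
        System.out.println(readConfig("/hypothetical/secret/app.conf"));
    }
}
```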

Customization and Configuration of JVM

Customizing and configuring the Java Virtual Machine (JVM) allows developers to optimize its behavior for specific application requirements, enhancing performance, memory usage, and resource management.

Command-Line Options for JVM Configuration

The JVM offers a variety of command-line options and configuration parameters to adjust its settings.

For example, developers can configure the heap size using -Xms (initial heap size) and -Xmx (maximum heap size) to control memory allocation, which can help improve performance by reducing the frequency of garbage collection cycles.

The JVM also provides options for selecting specific garbage collection algorithms, such as the G1 Garbage Collector (-XX:+UseG1GC) or Parallel GC (-XX:+UseParallelGC), each designed for different performance characteristics like throughput, pause times, and memory footprint.

Other customization options include adjusting the JVM thread management settings that we have already mentioned, such as the number of threads used for garbage collection (-XX:ParallelGCThreads) or configuring JVM logging to track garbage collection events, class loading, and other operations (-Xlog:gc*).

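Additionally, JVM tuning can extend to JIT-related settings. JIT compilation is enabled by default; the -server flag historically selected the server compiler, which optimizes more aggressively for long-running server workloads, and this is the default behavior on modern 64-bit JVMs. Profiling options help analyze performance bottlenecks.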
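To check that command-line options actually took effect, a program can ask the running JVM for its settings. The sketch below uses the standard `RuntimeMXBean` and `Runtime` APIs; the class name `JvmSettingsDemo` and the launch command shown in the comment are illustrative assumptions.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Sketch: inspecting the options the current JVM was started with.
// Example launch (illustrative):
//   java -Xms256m -Xmx512m -XX:+UseG1GC JvmSettingsDemo
class JvmSettingsDemo {

    // JVM options passed on the command line (for example -Xmx512m).
    static List<String> jvmArguments() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments();
    }

    // Approximate maximum heap size in MiB, as seen by the running JVM.
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("JVM arguments: " + jvmArguments());
        System.out.println("Max heap (MiB): " + maxHeapMiB());
        System.out.println("Available processors: "
                + Runtime.getRuntime().availableProcessors());
    }
}
```

The reported maximum heap can be slightly below the value passed to -Xmx, so it is better treated as an approximate check than as an exact figure.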

Extending JVM Functionality: Plugins and Integrations

Plugins and integrations offer a powerful way to extend the capabilities of the Java Virtual Machine (JVM), enabling specialized functionality, performance enhancements, and streamlined development processes. Alternative JVM languages are one form of extension: Kotlin, Scala, and Groovy provide developers with advanced features and concise syntax, making code more expressive and maintainable.

Another important aspect of extending JVM functionality is the use of JVM plugins and libraries that integrate with other systems or provide additional tools for application development.

For example, tools like JVM profiling libraries and performance monitoring agents allow developers to gain deeper insights into application performance, memory usage, and thread behavior.

Additionally, integrating with technologies like Apache Spark (for big data processing) or Spring Framework (for enterprise application development) leverages the JVM’s flexibility to support complex enterprise architectures.

Through these plugins and integrations, the JVM evolves into a powerful, customizable runtime environment that can be tailored to meet specific application needs, whether in data processing, cloud computing, or real-time systems.

Future of JVM: Trends and Innovations

The future of the Java Virtual Machine (JVM) is increasingly tied to cloud computing and microservices as these technologies continue to evolve and shape modern application development.

The JVM's platform independence, mature ecosystem, and ability to run multiple languages on a single runtime make it an ideal candidate for cloud environments where scalability, flexibility, and efficiency are paramount.

JVM in Cloud Computing and Microservices

In cloud computing, JVM-based applications benefit from cloud-native capabilities like auto-scaling, containerization, and distributed computing. The JVM is well-suited for deployment in Docker containers or Kubernetes clusters, enabling microservices architectures that are loosely coupled, scalable, and resilient.

In the realm of microservices, JVM technologies such as Spring Boot and Quarkus are gaining significant traction. These frameworks optimize the JVM for cloud-native applications by providing quick startup times, low memory consumption, and simplified deployment.

Quarkus, in particular, is designed for containerized environments and focuses on reducing the JVM's overhead, enabling faster execution and making it a great fit for serverless computing.

As the adoption of serverless computing and cloud-native architectures continues to grow, the JVM will likely see innovations aimed at improving performance, reducing resource consumption, and enabling faster, more efficient runtime environments.

The Evolution of JVM: Adapting to New Technologies

The evolution of the Java Virtual Machine (JVM) has been shaped by the need to adapt to emerging technologies and modern development practices. Originally created with consumer devices and browser applets in mind, the JVM has continuously evolved to support new programming language trends, performance optimizations, and diverse deployment environments.

In response to the growing demand for cloud computing, microservices, and containerization, the JVM has undergone several significant enhancements, making it more efficient for modern architectures.

Tools like GraalVM, a high-performance polyglot VM, allow applications written in multiple languages (e.g., Java, JavaScript, Ruby) to run on a single runtime, expanding the JVM's versatility and supporting use cases such as serverless computing and edge computing.

Another key area of evolution is performance. The JVM has long relied on JIT (Just-In-Time) compilation, which compiles frequently executed bytecode to native code at runtime, and has more recently embraced ahead-of-time (AOT) compilation as well. Both are aimed at reducing startup times and improving memory usage, especially in cloud-native environments.

As Java continues to be widely used in enterprise applications, the JVM is also evolving to better support reactive programming, functional programming, and other modern paradigms, ensuring its adaptability and longevity in the ever-changing landscape of software development.
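As a small illustration of the functional and asynchronous styles mentioned above, the following sketch uses only the standard library: a stream pipeline for the functional part and CompletableFuture for asynchronous composition. CompletableFuture is a building block rather than a full reactive library, and the class and method names here (ModernParadigms, shortNamesUpper, totalLengthAsync) are hypothetical. It assumes Java 9 or later because of List.of.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.stream.Collectors;

// Hypothetical example class; names are chosen for illustration only.
class ModernParadigms {

    /** Functional style: keep names up to maxLen characters and upper-case them. */
    static List<String> shortNamesUpper(List<String> names, int maxLen) {
        return names.stream()
                .filter(n -> n.length() <= maxLen)
                .map(String::toUpperCase)
                .collect(Collectors.toList());
    }

    /** Asynchronous style: compute the total length of all names off the calling thread. */
    static CompletableFuture<Integer> totalLengthAsync(List<String> names) {
        return CompletableFuture
                .supplyAsync(() -> names)
                .thenApply(list -> list.stream().mapToInt(String::length).sum());
    }

    public static void main(String[] args) {
        List<String> langs = List.of("Java", "Kotlin", "Scala", "Groovy");
        System.out.println(shortNamesUpper(langs, 5));     // prints [JAVA, SCALA]
        System.out.println(totalLengthAsync(langs).join()); // prints 21
    }
}
```

The same pipeline shape carries over to other JVM languages such as Kotlin and Scala, which is part of why these paradigms spread across the ecosystem.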

JVM in the Era of Artificial Intelligence and Machine Learning

In the era of artificial intelligence and machine learning, the JVM is playing an increasingly significant role as a platform for building and deploying intelligent applications.

Traditionally associated with enterprise applications, the JVM is now supporting AI and ML workflows through a combination of performance optimizations, language interoperability, and the integration of specialized libraries.

The JVM ecosystem has seen the rise of ML libraries and tools like Deeplearning4j and Weka, which are optimized for use within JVM environments.

These libraries provide support for neural networks, decision trees, and other machine learning models, making the JVM a viable platform for AI and ML development.

Furthermore, Java’s platform independence and the ability to run on cloud infrastructure ensure that AI models can be deployed efficiently across various environments, including cloud services and edge devices.

As AI and ML workloads require more processing power, innovations in JVM performance, such as GraalVM and JVM-based accelerators, aim to enhance the speed and efficiency of these tasks, bringing AI and ML capabilities to JVM-powered applications.

In this way, the JVM is evolving to meet the computational demands of modern AI and ML applications while maintaining the stability and flexibility that have made it a staple in enterprise environments.


Java Real Use Cases

Java's versatility makes it a staple in numerous industries. Some examples include:

Enterprise Software: Java's robustness and scalability are crucial for building complex systems for finance, CRM, and supply chain management. Think of large-scale applications that handle tons of data and need to be reliable.

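Android Mobile Development: Java is a core language for Android app development, alongside Kotlin, powering a vast number of apps you use daily. Note that Android runs these apps on its own runtime (ART) rather than on a standard JVM.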

Financial Sector: The financial industry relies on Java for its security and reliability in handling transactions and building trading platforms. Security is paramount here!

Big Data: Java's capabilities are leveraged in big data processing with frameworks like Hadoop, helping analyze massive datasets.

E-commerce: Java plays a vital role in e-commerce platforms. Major online retailers are among its top adopters because it ensures smooth transactions and efficiently handles large volumes of traffic.

Gaming: While not as dominant as it once was, Java is still used in game development, particularly for mobile games.

Java for IoT: Java’s versatility, scalability, security features, and compatibility with cloud services make it a compelling choice for developing robust and scalable Internet of Things (IoT) solutions.

Other Industries: Java's adaptability extends to other sectors, including government, healthcare, and education, where stable and high-performance applications are essential.

Conclusion: The Role of JVM in Modern Software Development

In conclusion, the Java Virtual Machine (JVM) remains a pivotal component in modern software development, continually adapting to new technologies and evolving paradigms while maintaining its core strengths of platform independence, security, and flexibility.

For those wondering if Java is still relevant, the answer lies in the JVM’s enduring adaptability and widespread integration with cutting-edge technologies.

As the backbone of the Java ecosystem, the JVM enables cross-platform compatibility, allowing applications to run seamlessly on any device or operating system with a JVM implementation. This foundational characteristic is complemented by the JVM’s ability to integrate with modern technologies such as cloud computing, microservices, and artificial intelligence (AI), positioning it as a key enabler of scalable, distributed, and data-driven systems.

The JVM’s adaptability is evident in its support for a diverse range of programming languages beyond Java, including Kotlin, Scala, and Groovy, providing developers with a broad toolkit for tackling complex problems.

Its robust memory management features, garbage collection, and security mechanisms ensure that applications run efficiently and securely, even in high-demand environments like cloud-native architectures and serverless computing.

Additionally, the JVM has evolved to support the growing demands of machine learning and big data processing, with frameworks like Apache Spark and libraries like Deeplearning4j expanding the JVM's role in AI-driven development.

In the era of microservices and containerization, the JVM continues to be relevant, with innovations like Quarkus and GraalVM optimizing it for faster startup times and reduced resource consumption.

As a result, the JVM remains a crucial technology in developing modern, high-performance, and scalable applications. Its ability to integrate with cloud infrastructure, support diverse programming paradigms, and adapt to the needs of AI and machine learning ensures that it will remain a central part of the software development landscape for the foreseeable future.

For businesses seeking to outsource Java development services, Jalasoft’s Java development teams offer reliable, innovative, and efficient solutions tailored to the complex needs of software development.